Mapping the 180k IPs of git repo crawlers

For the past few years I have been running a Gitea instance at code.bitgloo.com where I host git repositories for personal and professional projects. In the past couple of weeks, my server (a poor toaster NAS) began to get slammed at this domain: traffic was coming from seemingly all over the place, requesting every URL beneath every public repo.

Others noticed this too: a new wave of web crawlers that love to devour code repositories, possibly to feed AI training:

- https://drewdevault.com/2025/03/17/2025-03-17-Stop-externalizing-your-costs-on-me.html

- https://status.sr.ht/issues/2025-03-17-git.sr.ht-llms/

- https://www.reddit.com/r/Futurology/comments/1jh4vch/cloudflare_turns_ai_against_itself_with_endless/

For myself, I took the opportunity to better learn Fail2ban and its filtering rules so I could block this traffic. The requests had some similarities, but were largely unique, which made them nearly impossible to capture with a reasonable filter:

- Requests would come from unique IPs, with one IP rarely sending more than one or two GETs.

- User agents of nearly every possible combination of hardware and web browsers from the past two decades.

- All requests were for listings of repo issues, pull requests, commit histories, or git blames.

- These requests were direct, without any crawling leading to or following the list queries.
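If you want to check the "one or two GETs per IP" pattern on your own server, here is a minimal sketch that tallies requests per client address. It assumes NGINX's default `combined` log format, and `requests_per_ip` and `summarize` are hypothetical helpers of my own, not part of any tool mentioned here.

```python
import re
from collections import Counter

# Assumes NGINX's default "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
LOG_LINE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3}')


def requests_per_ip(lines):
    """Count how many requests each client IP made; malformed lines are skipped."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m:
            counts[m.group('ip')] += 1
    return counts


def summarize(counts):
    """Return (number of distinct IPs, fraction of them that made at most two requests)."""
    if not counts:
        return 0, 0.0
    few = sum(1 for n in counts.values() if n <= 2)
    return len(counts), few / len(counts)
```

For example, `summarize(requests_per_ip(open('/var/log/nginx/access.log')))` would give the number of distinct IPs and the share that made at most two requests (that log path is a common default and may differ on your system).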

An aside on the user agents

Take a look at these for yourself! I discovered GoAccess, which can visualize NGINX's log data.

...Really, crawlers? I'm getting traffic from Windows NT, iPod Touches, and Android Froyo? I do enjoy retro tech, but I'm still feeling skeptical.

```text
 Hits      h%   Vis.      v%  Tx. Amount  Data
-----  ------  -----  ------  ----------  ----
56319  43.91%  18378  44.19%   34.05 MiB  Windows ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||
 9595   7.48%   2826   6.80%    6.17 MiB  ├─ Windows 10 ||||||||||||||||||||
 9247   7.21%   3067   7.38%    5.50 MiB  ├─ Windows 2000 |||||||||||||||||||
 6305   4.92%   2130   5.12%    3.84 MiB  ├─ Windows XP |||||||||||||
 4465   3.48%   1510   3.63%    2.62 MiB  ├─ Windows 7 |||||||||
 4422   3.45%   1484   3.57%    2.69 MiB  ├─ Windows Vista |||||||||
 3967   3.09%   1316   3.16%    2.36 MiB  ├─ Windows 8 ||||||||
 3892   3.03%   1279   3.08%    2.33 MiB  ├─ Windows XP x64 ||||||||
 2406   1.88%    795   1.91%    1.44 MiB  ├─ Windows 98 |||||
 2402   1.87%    786   1.89%    1.43 MiB  ├─ Windows CE |||||
 2375   1.85%    768   1.85%    1.42 MiB  ├─ Windows NT |||||
 2374   1.85%    794   1.91%    1.39 MiB  ├─ Win 9x 4.90 |||||
 2343   1.83%    790   1.90%    1.37 MiB  ├─ Windows NT 4.0 ||||
23113  18.02%   7604  18.29%   14.13 MiB  Linux |||||||||||||||||||||||||||||||||||||||||||||||||
23094  18.00%   7585  18.24%   14.03 MiB  ├─ Linux |||||||||||||||||||||||||||||||||||||||||||||||||
17983  14.02%   6038  14.52%    9.36 MiB  iOS ||||||||||||||||||||||||||||||||||||||
 1091   0.85%    377   0.91%  573.16 KiB  ├─ iPod OS 3.3 ||
 1077   0.84%    337   0.81%  590.63 KiB  ├─ iPod OS 3.0 ||
 1062   0.83%    388   0.93%  540.07 KiB  ├─ iPod OS 4.2 ||
  194   0.15%     59   0.14%  107.26 KiB  ├─ iPad OS 10.3.3 |
  181   0.14%     62   0.15%   97.30 KiB  ├─ iPhone OS 12.4.8 |
  178   0.14%     52   0.13%   98.32 KiB  ├─ iPhone OS 7.1.2 |
 9966   7.77%   3361   8.08%    5.83 MiB  Android |||||||||||||||||||||
 2553   1.99%    849   2.04%    1.50 MiB  ├─ Honeycomb 3 |||||
  788   0.61%    261   0.63%  476.28 KiB  ├─ KitKat 4.4 |
  739   0.58%    275   0.66%  416.13 KiB  ├─ Ice Cream Sandwich 4.0 |
  430   0.34%    153   0.37%  244.07 KiB  ├─ Froyo 2.2 |
 1356   1.06%     83   0.20%    2.34 MiB  Crawlers ||
```
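Where do labels like "Windows 2000" come from? Browsers announce their OS in the user agent with a token such as `Windows NT 5.0`, and log analyzers map those tokens to release names. Below is a simplified sketch of that mapping using the well-known NT version numbers; it is not GoAccess's actual detection code, and `windows_release` and `tally` are hypothetical helpers. Since any client can send any user agent string it likes, a table like the one above shows what the bots claim to be, not what they run.

```python
import re
from collections import Counter

# Well-known "Windows NT x.y" tokens and the releases they identify.
# Simplified illustration only, not GoAccess's detection logic.
WINDOWS_NT_VERSIONS = {
    '4.0': 'Windows NT 4.0',
    '5.0': 'Windows 2000',
    '5.1': 'Windows XP',
    '5.2': 'Windows XP x64 / Server 2003',
    '6.0': 'Windows Vista',
    '6.1': 'Windows 7',
    '6.2': 'Windows 8',
    '6.3': 'Windows 8.1',
    '10.0': 'Windows 10/11',  # both report NT 10.0
}

NT_TOKEN = re.compile(r'Windows NT (\d+\.\d+)')


def windows_release(user_agent):
    """Map a user agent string to a Windows release name, or None if not Windows."""
    m = NT_TOKEN.search(user_agent)
    if m:
        return WINDOWS_NT_VERSIONS.get(m.group(1), 'Windows NT ' + m.group(1))
    # Windows Me sends "Windows 98; Win 9x 4.90", so check it before plain 98
    if 'Win 9x 4.90' in user_agent:
        return 'Windows Me'
    if 'Windows 98' in user_agent or 'Win98' in user_agent:
        return 'Windows 98'
    return None


def tally(user_agents):
    """Count Windows releases across an iterable of user agent strings."""
    return Counter(r for r in map(windows_release, user_agents) if r)
```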

My "nuclear" option

An open-source project spawned (or at least grew rapidly) from this: Anubis. Anubis requires clients to complete a "proof of work" before being served, which slows down crawlers, and it seems to be a fairly promising remedy. It's available as a Docker image, which would be new territory for me, and the setup instructions are not too friendly to an inexperienced user either.
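To illustrate the idea behind "proof of work", here is a generic hashcash-style sketch: the client must find a nonce whose SHA-256 hash (over a server-issued challenge plus the nonce) starts with a given number of zero hex digits, while the server checks the answer with a single hash. This is an illustration under my own assumptions, not Anubis's actual code or challenge format; the challenge string and difficulty are made up.

```python
import hashlib
import itertools


def _digest(challenge: str, nonce: int) -> str:
    """SHA-256 hex digest of the challenge string followed by the nonce."""
    return hashlib.sha256(f'{challenge}{nonce}'.encode()).hexdigest()


def solve(challenge: str, difficulty: int) -> int:
    """Client side: brute-force the first nonce whose digest starts with
    `difficulty` zero hex digits (about 16**difficulty tries on average)."""
    target = '0' * difficulty
    for nonce in itertools.count():
        if _digest(challenge, nonce).startswith(target):
            return nonce


def verify(challenge: str, difficulty: int, nonce: int) -> bool:
    """Server side: checking a submitted nonce costs a single hash."""
    return _digest(challenge, nonce).startswith('0' * difficulty)
```

Each extra zero digit multiplies the expected work by 16, so a difficulty that costs one visitor a fraction of a second adds up quickly for a crawler that keeps showing up as a new client.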

So, I chose to switch git web servers instead. Goodbye Gitea, hello cgit and gitolite! This actually took a while to set up between SSH access, repo management, and file permissions, but overall I'm happy with the interface. I can also do without issue, PR, and blame pages, so any more requests for those would surely be bots.

Following this, I set up a strict Fail2ban filter that bans anyone requesting those no-longer-existing pages for 100 days. See the filter definition below. The list of 4xx error codes came from another filter, though I added code 499: this NGINX-specific code ("client closed request") would show up when the server got so overwhelmed that clients gave up before it could respond. I decided that should be a red flag too.

```ini
[Definition]

failregex = ^<HOST> \- .* \"GET .*\/commits\/.*\" (401|404|444|403|400|499)
            ^<HOST> \- .* \"GET .*\/blame\/.*\" (401|404|444|403|400|499)
            ^<HOST> \- .* \"GET .*\/pulls.*\" (401|404|444|403|400|499)
            ^<HOST> \- .* \"GET .*\/issues.*\" (401|404|444|403|400|499)

ignoreregex =
```
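Before trusting a filter like this with 100-day bans, it's worth testing it. Fail2ban ships `fail2ban-regex` for exactly that (it takes a log file and a filter file). The sketch below is a rough stand-in in plain Python that runs the same four patterns against sample lines. Its one big assumption is that `<HOST>` can be approximated by a non-whitespace group; Fail2ban substitutes its own, stricter address pattern. `banned_host` is a hypothetical helper name.

```python
import re

# The four failregex lines from the filter, copied verbatim.
FAILREGEX = [
    r'^<HOST> \- .* \"GET .*\/commits\/.*\" (401|404|444|403|400|499)',
    r'^<HOST> \- .* \"GET .*\/blame\/.*\" (401|404|444|403|400|499)',
    r'^<HOST> \- .* \"GET .*\/pulls.*\" (401|404|444|403|400|499)',
    r'^<HOST> \- .* \"GET .*\/issues.*\" (401|404|444|403|400|499)',
]

# Approximation of Fail2ban's <HOST> tag: any run of non-whitespace.
HOST = r'(?P<host>\S+)'
PATTERNS = [re.compile(p.replace('<HOST>', HOST)) for p in FAILREGEX]


def banned_host(line):
    """Return the client address if any pattern matches the log line, else None."""
    for pattern in PATTERNS:
        m = pattern.match(line)
        if m:
            return m.group('host')
    return None
```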

Too many IPs

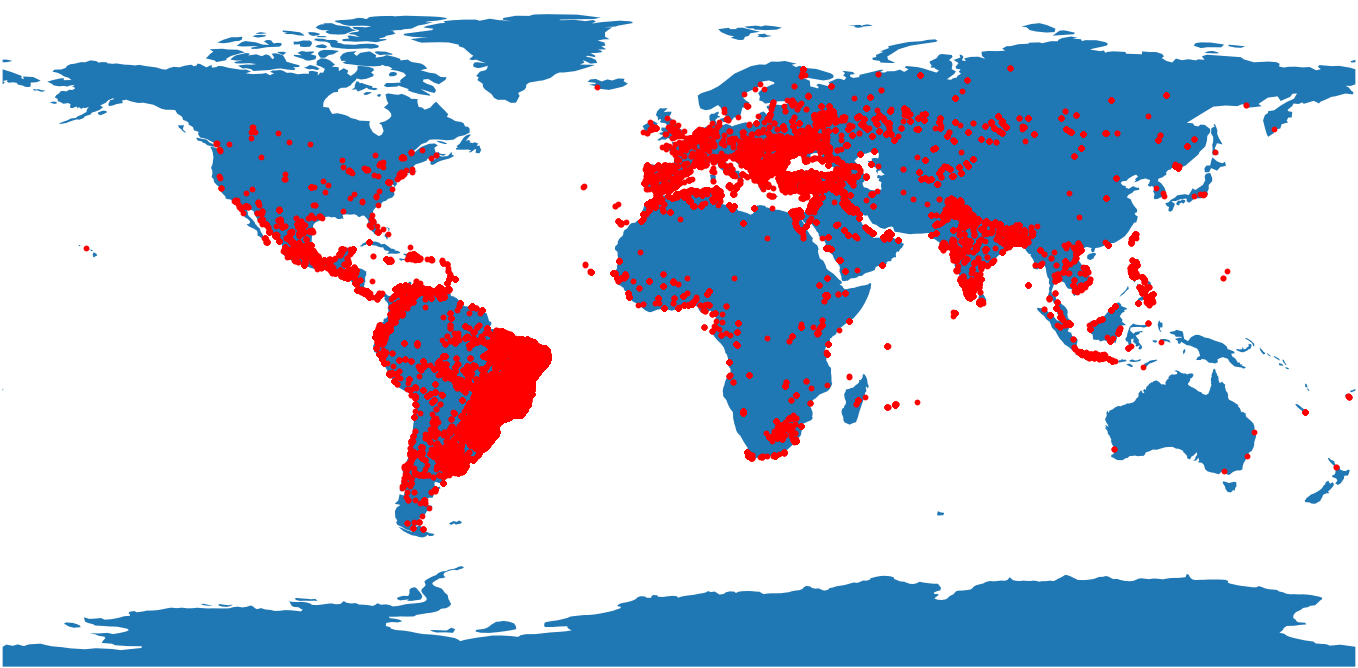

After 36 hours, the filter had collected over 180,000 unique IPs that were making these very suspicious requests. 180k IPs, only to code.bitgloo.com, only for these four types of pages. Crazy! With this massive list, I wanted a world view of where all this traffic was coming from. How widespread are these bots and crawlers?
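The map script at the end of this post expects the banned addresses in a newline-delimited `ips.txt`. One way to produce that file is to parse the "Banned IP list" line printed by `fail2ban-client status <jail>`. The sketch below assumes that output format (a tab or spaces after the label, addresses separated by spaces), and both helper names are hypothetical.

```python
import re


def banned_ips(status_output: str) -> list[str]:
    """Extract addresses from the 'Banned IP list:' line of
    `fail2ban-client status <jail>` output (format assumed)."""
    for line in status_output.splitlines():
        m = re.search(r'Banned IP list:\s*(.*)$', line)
        if m:
            return m.group(1).split()
    return []


def write_ip_file(ips, path='ips.txt'):
    """Write one unique address per line (sorted as strings), the format the map script reads."""
    with open(path, 'w') as f:
        for ip in sorted(set(ips)):
            f.write(ip + '\n')
```

With a real jail, the text would come from something like `subprocess.run(['fail2ban-client', 'status', JAIL], capture_output=True, text=True).stdout`, where `JAIL` is a placeholder for your jail's name (this usually needs root).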

Conveniently, MaxMind provides GeoLite2, a freely downloadable database of IP addresses and their rough geographical locations (account creation required), and its geoip2 Python library takes in an IP and simply spits out the location data.

This can be paired with a few other libraries (geopandas, geodatasets, and matplotlib) to produce a worldwide map of the IPs.

Here's the result, with 183,018 IPs plotted:

These are all over the place, but it is interesting how a few areas stick out: Mexico, Brazil, Europe, and India. Would one expect more from China or the USA? Could it be cheaper (or maybe less conspicuous?) to run crawlers from these areas?
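Eyeballing a map only goes so far; tallying the IPs per country would put numbers on which regions stick out. Here's a minimal sketch, assuming the same GeoLite2 City database as the map script. `count_by_country` and `geolite_country` are hypothetical helpers, and the lookup is passed in as a function so the counting can be tried without the database.

```python
from collections import Counter


def count_by_country(ips, lookup):
    """Tally IPs per country. `lookup` maps an IP string to an ISO country
    code, or None when the country is unknown."""
    counts = Counter()
    for ip in ips:
        counts[lookup(ip) or 'unknown'] += 1
    return counts


def geolite_country(reader):
    """Build a lookup function from an open geoip2.database.Reader
    (assumes a GeoLite2 City database, hence reader.city())."""
    import geoip2.errors  # imported lazily so count_by_country works without geoip2

    def lookup(ip):
        try:
            return reader.city(ip).country.iso_code
        except (geoip2.errors.AddressNotFoundError, ValueError):
            return None

    return lookup
```

Inside the same `with geoip2.database.Reader(...) as reader:` block as the map script, `count_by_country(ips, geolite_country(reader)).most_common(10)` would list the ten busiest countries.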

Are these truly dedicated bots and/or crawlers, or could there be hijacking of residential IPs that would make banning all of this impractical?

I hope an answer or a practical solution comes out soon. For little bitgloo.com, I'm still comfortable leaving the IP ban list in place, but I'd hate to box out any non-bot viewers. There's also the chance that I'll be slammed again once the bots decide to farm cgit instances...

Python script

Here's the map generator script if you're interested, using the GeoLite2 City database from MaxMind and a newline-delimited text file of IP addresses:

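```python
#!/usr/bin/python3

# Plot the rough locations of banned IPs on a world map, using MaxMind's
# GeoLite2 City database and a newline-delimited list of IPs in ips.txt.

import geoip2.database
import geoip2.errors
import geodatasets
import geopandas as gpd
import matplotlib.pyplot as plt
from geopandas import GeoDataFrame
from shapely.geometry import Point

geometry = []

with geoip2.database.Reader('GeoLite2-City_20250325/GeoLite2-City.mmdb') as reader:
    with open('ips.txt') as file:
        for line in file:
            ip = line.strip()
            if not ip:
                continue  # skip blank lines
            try:
                response = reader.city(ip)
            except (geoip2.errors.AddressNotFoundError, ValueError):
                # Not in the database, or not a valid IP address
                print(ip, 'not locatable!')
                continue
            lon = response.location.longitude
            lat = response.location.latitude
            if lon is None or lat is None:
                # Some records may carry no coordinates at all
                print(ip, 'not locatable!')
                continue
            geometry.append(Point(lon, lat))

print(len(geometry), 'IPs located.')

# Points are plain longitude/latitude (WGS 84)
gdf = GeoDataFrame(geometry=geometry, crs='EPSG:4326')

# Natural Earth land polygons serve as the base map
world = gpd.read_file(geodatasets.data.naturalearth.land['url'])

# Draw the land first, then the located IPs on top as red dots
ax = world.plot(figsize=(10, 6))
gdf.plot(ax=ax, marker='o', color='red', markersize=10)

plt.show()
```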