I2S master emulation with SPI peripheral

Background

My journey toward ultra-low-power audio sensing has centered on I2S MEMS microphones and the ESP32 and STM32 microcontrollers. I first developed a more efficient A-weighting filter while I transitioned to the STM32 family, a choice made because the ESP32 simply cannot do low-power data acquisition.

The next step is optimizing the hardware. The STM32 L family of microcontrollers is best suited for low-power applications; I began with the STM32L4 in particular since I already had one on hand.

STM32L4 is based on the ARM Cortex-M4F core that provides a hardware floating-point unit and support for a DSP instruction set, leading to an easy port of the ESP32 code (and then of my custom filter). Power usage dropped to the milliwatt range at the cost of component price: an entire ESP32 module is far less expensive than any STM32L4 processor.

I settled on an STM32L0 processor, which can compete on price while maintaining low-power specs. Its Cortex-M0+ core can fortunately execute fast enough given a switch to fixed-point calculations, but the other thing lost with the L0 is an I2S peripheral.

I2S emulation

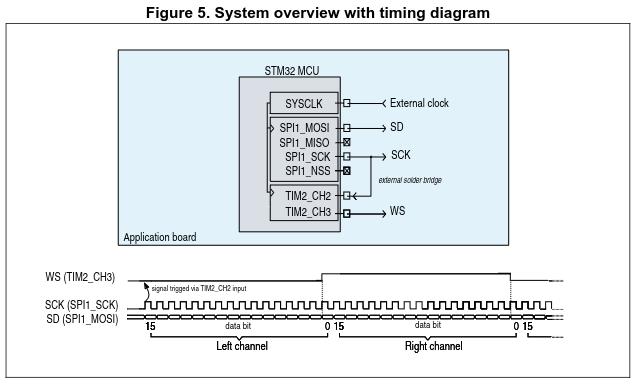

ST offers a workaround for the missing hardware in an application note (pdf link), which uses SPI and a timer peripheral to re-create the I2S protocol. SPI's SCK and MOSI/MISO pins are used for the bit clock and data I/O, while the timer follows SPI's clock to toggle a pin for the "WS" (word select) signal that frames the left and right audio channel data.

The app note is not entirely clear on how to configure the peripherals for this joint operation, and I didn't want to overcomplicate what seemed to be a simpler problem, at least in my case of an STM32 driving and sampling a single I2S microphone.

Ditching the timer

Since the SPI peripheral is already clocking the microphone and receiving over MISO, why can't it also send out the WS frame over MOSI? I can't find anything wrong with this idea, though one issue becomes apparent when working with a HAL: practically all SPI drivers offer transmit-only, receive-only, and transmit-receive routines, and simultaneous transmission and reception requires input and output buffers of the same size. If I want to receive kilobytes of I2S data, I would need an equal number of kilobytes dedicated to the repeating WS frame.

By the way, the WS frame is very simple: four bytes (i.e. 32 bits) of zeros for one audio channel (usually the left I believe), then four bytes of 0xFF for the other. This pattern would repeat throughout the transmit buffer, and to retrieve the microphone data we would only consider data where WS is "low".
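As a rough sketch of that pattern, here is the eight-byte frame and a helper that keeps only the words received while WS was low. The names `ws_frame` and `extract_ws_low_words` are mine, and the code assumes 8-bit SPI transfers sent MSB first and a receive buffer that starts on a frame boundary. Where the sample bits sit inside each 32-bit word depends on the microphone and the SPI clock phase, so no shifting is attempted here.

```c
#include <stdint.h>
#include <stddef.h>

#define WS_FRAME_LEN 8u

/* One stereo frame as it goes out on MOSI:
 * WS low for 32 clocks, then WS high for 32 clocks. */
static const uint8_t ws_frame[WS_FRAME_LEN] = {
    0x00, 0x00, 0x00, 0x00,
    0xFF, 0xFF, 0xFF, 0xFF
};

/* Copy the WS-low word of every complete frame in rx[] into out[].
 * Assumes rx[0] was clocked in together with ws_frame[0].
 * Returns the number of 32-bit words written. */
size_t extract_ws_low_words(const uint8_t *rx, size_t rx_len, uint32_t *out)
{
    size_t n = 0;
    for (size_t i = 0; i + WS_FRAME_LEN <= rx_len; i += WS_FRAME_LEN) {
        /* Bytes arrive MSB first, so the first byte is the top of the word. */
        out[n++] = ((uint32_t)rx[i]     << 24) |
                   ((uint32_t)rx[i + 1] << 16) |
                   ((uint32_t)rx[i + 2] <<  8) |
                    (uint32_t)rx[i + 3];
        /* rx[i + 4] .. rx[i + 7] belong to the WS-high channel and are skipped. */
    }
    return n;
}
```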

Bringing in DMA

Driving the SPI peripheral over DMA allows the microcontroller's core to sleep while data is transferred, minimizing power consumption. The DMA peripheral can also operate in a "circular" mode to continuously collect data, waking the processor only when a half-buffer is ready to be processed (while the other half begins to fill).
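The half-buffer hand-off could look something like the sketch below. The function names and the 4096-byte buffer size are placeholders of mine; on real hardware the two `on_rx_*` functions would be called from the receive DMA channel's half-transfer and transfer-complete interrupts, and the main loop would sleep until one of them fires.

```c
#include <stdint.h>
#include <stddef.h>

/* Assumed size. A multiple of 16 keeps each half a whole number of 8-byte frames. */
#define RX_LEN 4096u

static uint8_t rx_buf[RX_LEN];

/* Set by the interrupt handlers, consumed by the main loop. */
static const uint8_t * volatile ready_half = NULL;

/* Call from the DMA half-transfer interrupt: the first half is full. */
void on_rx_half_complete(void)
{
    ready_half = &rx_buf[0];
}

/* Call from the DMA transfer-complete interrupt: the second half is full. */
void on_rx_complete(void)
{
    ready_half = &rx_buf[RX_LEN / 2];
}

/* Returns the half that is ready for processing, or NULL if there is none.
 * On target, do this read-and-clear with interrupts masked so that a flag
 * set in between is not lost. */
const uint8_t *take_ready_half(void)
{
    const uint8_t *half = ready_half;
    ready_half = NULL;
    return half;
}
```

With these assumed numbers and a 48 kHz sample rate, each half holds 256 frames, or about 5.3 ms of audio, which is the time available to process one half before it starts being overwritten. This sketch does not detect that kind of overrun.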

ST's HAL can implement DMA for the transmit-receive operation, but again the two buffers it uses need to be the same size.

Why?

Because...

The DMA channels used for transmission and reception are configured separately: separate source and destination addresses, separate buffers, and separate buffer sizes. Of course there are very limited applications where separate transmit and receive buffer sizes would make sense, but for I2S this just happens to be the case. The receive buffer can be as large as it wants, but the transmitted WS frame can be kept to its eight-byte size.
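One caveat is worth checking on paper before trusting the idea. If both channels run in circular mode and start together, the receive buffer length should be a multiple of the eight-byte WS frame; otherwise the WS phase drifts relative to the buffer each time the receive side wraps. The small host-side model below (not target code, and the function name is my own) steps two circular indices the way the two DMA channels would advance and reports whether every receive position always lines up with the same WS byte.

```c
#include <stddef.h>

#define WS_FRAME_LEN 8u

/* Model two circular DMA channels advancing one byte per SPI transfer.
 * Returns 1 if, for every transfer, the byte stored at RX index i was
 * clocked in while WS frame byte (i % 8) was being sent; 0 otherwise. */
int ws_phase_is_stable(size_t rx_len, size_t transfers)
{
    if (rx_len == 0) {
        return 0;
    }

    size_t tx_idx = 0;
    size_t rx_idx = 0;
    for (size_t n = 0; n < transfers; n++) {
        if (rx_idx % WS_FRAME_LEN != tx_idx) {
            return 0;                           /* WS phase drifted */
        }
        tx_idx = (tx_idx + 1) % WS_FRAME_LEN;   /* TX channel wraps every 8 bytes */
        rx_idx = (rx_idx + 1) % rx_len;         /* RX channel wraps at its own length */
    }
    return 1;
}
```

A 4096-byte receive buffer passes this check, while a 1004-byte one fails as soon as the receive index wraps for the first time.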

The solution

I would share the actual code here, but it is highly dependent on your MCU and HAL. Here's the gist of it:

- Pull the "transmit-receive with DMA" function out of ST's HAL.

- Give it an additional argument for TX buffer size.

- Define the 8-byte WS frame buffer and pass that to this new function, along with an RX buffer as large as you'd like.

The audio processing hooks into the receive channel's interrupts, so there is no need to handle (or worry about) the transmit channel's interrupts.
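To make the shape of that change concrete without tying it to one HAL, here is a hardware-free sketch of the only part that really changes: the two DMA channels get their own lengths. Every type and function name here is hypothetical and is not part of ST's HAL. In a real port, the two filled-in descriptors would correspond to whatever the HAL's transmit-receive routine programs into the TX and RX DMA channels.

```c
#include <stdint.h>
#include <stddef.h>

/* Hypothetical description of one DMA channel's memory side. */
typedef struct {
    uintptr_t memory_addr;  /* buffer address in RAM or flash */
    uint16_t  length;       /* number of bytes before the channel wraps */
    int       circular;     /* 1 = restart automatically at the end */
} dma_channel_plan_t;

/* Hypothetical pair of channels used for one SPI transmit-receive. */
typedef struct {
    dma_channel_plan_t tx;
    dma_channel_plan_t rx;
} spi_dma_plan_t;

/* Like a "transmit-receive with DMA" call, but with a separate TX size.
 * Returns 0 on success, -1 on bad arguments. */
int spi_txrx_dma_plan(spi_dma_plan_t *plan,
                      const uint8_t *tx, uint16_t tx_len,
                      uint8_t *rx, uint16_t rx_len)
{
    if (plan == NULL || tx == NULL || rx == NULL || tx_len == 0 || rx_len == 0) {
        return -1;
    }
    /* Keep the WS pattern aligned with the RX buffer across wrap-arounds. */
    if (rx_len % tx_len != 0) {
        return -1;
    }

    plan->tx.memory_addr = (uintptr_t)tx;
    plan->tx.length      = tx_len;      /* e.g. the 8-byte WS frame */
    plan->tx.circular    = 1;

    plan->rx.memory_addr = (uintptr_t)rx;
    plan->rx.length      = rx_len;      /* as large as RAM allows */
    plan->rx.circular    = 1;
    return 0;
}
```

Rejecting a receive length that is not a multiple of the transmit length is my own addition; it keeps the WS pattern lined up with the receive buffer every time the receive channel wraps.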

So, now we can continuously capture and process I2S audio with just a few milliwatts of power. To give an example of what our little microcontroller can achieve here, my application calculates A-weighted decibel readings from audio sampled at 48 kHz. The processor's utilization in this case is only around 60%. It would be interesting to see how well this performs on a battery, especially since the MCU and microphone can run at just 1.8 V; maybe this could survive on a small solar cell too...